As the IT world transitions to microservices and tools like Kubernetes are booming, one lingering issue is slowly coming into full force: the combinatorial explosion of versions across microservices. The community expectation is that this is still much better than the dependency hell of the previous era. Nonetheless, versioning products built on microservices is a pretty hard problem. To prove the point, articles like “Give Me Back My Monolith” immediately come to mind.

If you’re wondering what this is all about, let me explain. Suppose your product consists of 10 microservices. Now suppose each of those microservices gets 1 new version. Just 1 version; we can all agree that sounds pretty trivial and insignificant. Now look back at our product. With just 1 new version of each component, we now have 2^10, that is 1024, combinations of how we can compose our product.

If this is not entirely clear, let me explain the math. We have 10 microservices, and each has one update. So we have 2 possible versions for each microservice (either the old one or the updated one). For each component we can use either one of those 2 versions. That is equivalent to having a binary number with 10 places. For example, let’s say 1’s are new versions and 0’s are old versions, so one possible combination would be 1001000000, with the 1st and 4th components updated and all others not. From math we know that a binary number with 10 places has 2^10, or 1024, possible values. That is exactly the number we are dealing with here.
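To make the counting concrete, here is a minimal Python sketch that enumerates every old/new combination for 10 services as a bit string. The names `SERVICES` and `updated_services` are made up for this illustration.

```python
from itertools import product

SERVICES = 10  # number of microservices in the example

# Each service runs either its old version (0) or its new version (1).
combinations = ["".join(map(str, bits)) for bits in product((0, 1), repeat=SERVICES)]

print(len(combinations))             # 1024, i.e. 2 ** 10
print("1001000000" in combinations)  # True


def updated_services(combo: str) -> list:
    """Return the 1-based positions of services running the new version."""
    return [i for i, bit in enumerate(combo, start=1) if bit == "1"]


print(updated_services("1001000000"))  # [1, 4]
```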

Now to continue with our thinking: what happens if we have 100 microservices with 10 possible versions each? The whole thing gets pretty ugly. It’s now 10^100 combinations, which is an enormous number. To me, it’s good to state it like this, because now we’re not hiding behind words like “kubernetes” but facing this hard problem head-on.
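A quick sanity check of that number in Python. The "one test run per second" rate below is purely a hypothetical figure chosen to give a feel for the scale, not a measurement.

```python
def count_combinations(services: int, versions_each: int) -> int:
    """Number of ways to pick one version for every service."""
    return versions_each ** services


total = count_combinations(100, 10)
print(total == 10 ** 100)  # True
print(len(str(total)))     # 101 digits

# Hypothetical assumption: we could test one full composition per second.
SECONDS_PER_YEAR = 60 * 60 * 24 * 365
years_needed = total // SECONDS_PER_YEAR
print(f"{years_needed:.3e} years")  # on the order of 10^92 years
```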

Why am I so captivated by this problem? Partly because I come from the NLP / AI world, where we were actively talking about the problem of combinatorial explosion maybe 5-6 years ago. Just instead of versions we had different words, and instead of products we had sentences and paragraphs. Now, while the NLP and AI problem remains largely unsolved, the fact is that substantial progress has been made recently (to me, the progress could be faster if people were a little less obsessed with machine learning and a little more considerate of other techniques, but that would be off-topic).

Back to the DevOps world of containers and microservices. We have this enormous elephant of a problem in the room, and what I frequently hear is: just take kubernetes and helm and it’ll be fine. Guess what, it won’t be fine on its own. What is more, a closed-form solution to such a problem is not feasible. Like in NLP, we should first approach this problem by limiting the search space, that is, by pruning outdated combinations.

One thing that helps: last year on this blog I wrote about the need to keep a minimal span of versions in production. It is also important to note that a good CI/CD process helps a lot in pruning variations. However, the current state of CI/CD is not enough without proper accounting, tracking and tooling to handle the actual combinations of components.
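As a rough illustration of how much a narrow version span prunes the search space, the sketch below counts compositions with and without a cap on versions per service. Interpreting "minimal span" as "keep only the newest `max_span` versions of each service" is my assumption for this example, and the service names are hypothetical.

```python
from math import prod
from typing import Dict, Optional


def count_compositions(available: Dict[str, int], max_span: Optional[int] = None) -> int:
    """Count product compositions.

    available maps a service name to how many versions of it exist.
    If max_span is given, only the newest max_span versions of each
    service are considered (an assumed reading of "minimal span").
    """
    if max_span is None:
        return prod(available.values())
    return prod(min(count, max_span) for count in available.values())


# Hypothetical product: 10 services with 10 versions each.
available = {f"service-{i}": 10 for i in range(1, 11)}

print(count_compositions(available))              # 10000000000 (10 ** 10)
print(count_compositions(available, max_span=2))  # 1024 (2 ** 10)
```

Even with a span of 2 the count still grows exponentially with the number of services, which is why pruning alone is not enough without tooling on top.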

What we need are larger-scale integration-stage experiments where we can establish a risk factor per component and have an automated process that upgrades different components and tests them without human intervention, to see what’s working and what’s not.

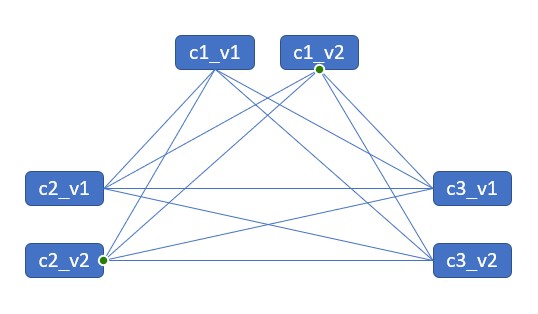

So the system could look like:

- Developers writing tests (this is crucial – because otherwise there is no reference point, it’s like labeling data in ML)

- Every component (project) has its own well-defined CI pipeline. This process is well established by now, and the per-component CI problem is largely solved

- “Smart Integration Engine” sits on top of the various CI pipelines and assembles component projects into the final product, runs the tests, figures out the shortest path to completing the desired features given the present components, and computes risk factors. If upgrades are not possible, the engine alerts developers about the best possible candidates and where it thinks things are failing. Again, tests are crucial: the integration engine uses them as its reference point.

- The CD pipeline then pulls data from the Smart Integration Engine and performs the actual roll-out. This completes the cycle.
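To give a feel for the integration step in the list above, here is a deliberately naive Python sketch. It is not how any real engine works: it greedily upgrades one component at a time, uses a caller-supplied `run_tests` function as the reference point, and reports a crude risk factor (the share of attempted upgrades that failed). The function name `integrate`, the data shapes, and the sample components are all hypothetical, and it makes no attempt at the "shortest path to desired features" part.

```python
from typing import Callable, Dict, List, Tuple

Composition = Dict[str, str]  # component name -> version


def integrate(
    baseline: Composition,
    candidates: Dict[str, List[str]],
    run_tests: Callable[[Composition], bool],
) -> Tuple[Composition, Dict[str, float], List[Tuple[str, str]]]:
    """Greedily upgrade one component at a time, keeping upgrades that pass.

    Returns the best composition found, a naive per-component risk factor,
    and the list of (component, version) upgrades that failed the tests.
    """
    current = dict(baseline)
    risk: Dict[str, float] = {}
    failed: List[Tuple[str, str]] = []
    for name, versions in candidates.items():
        tried = failures = 0
        # Candidates are listed oldest to newest; try the newest first.
        for version in reversed(versions):
            trial = {**current, name: version}
            tried += 1
            if run_tests(trial):
                current = trial
                break
            failures += 1
            failed.append((name, version))
        risk[name] = failures / tried if tried else 0.0
    return current, risk, failed


# Hypothetical product and candidate upgrades.
baseline = {"auth": "1.0", "billing": "2.0", "ui": "3.0"}
candidates = {"auth": ["1.1"], "billing": ["2.1", "2.2"], "ui": ["3.1"]}


def run_tests(composition: Composition) -> bool:
    # Stand-in for developer-written tests: pretend billing 2.2 needs ui 3.1.
    return not (composition["billing"] == "2.2" and composition["ui"] != "3.1")


composition, risk, failed = integrate(baseline, candidates, run_tests)
print(composition)  # {'auth': '1.1', 'billing': '2.1', 'ui': '3.1'}
print(risk)         # {'auth': 0.0, 'billing': 0.5, 'ui': 0.0}
print(failed)       # [('billing', '2.2')]
```

Note that the greedy order misses a valid composition here: billing 2.2 would pass once ui 3.1 is in place. That is exactly the kind of thing a smarter engine would need to search for, and the list of failed upgrades is what it would hand back to developers.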

In summary, to me one of the biggest pains right now is the lack of an integration engine that would mix various components into a product and thus allow for proper traceability of how things actually work in the complete product. I would appreciate thoughts on this. (Spoiler alert: I’m currently working on Reliza to act as that “Smart Integration Engine”.)

One final thing I want to mention: to me, a monolith is not the answer for any project of substantial size. So I would be very skeptical of any attempt to actually improve lead times and quality of deliveries by going back to a monolith. First, a monolith has a similar problem of dependency management between various libraries, but it’s largely hidden in development time. As a result, people can’t really make any changes in the monolith, so the whole process slows to a crawl.

Microservices make things better, but then they hit the version explosion at the integration stage. Yes, essentially, we moved the same problem from the dev stage to the integration stage. But, in my view, it is still better, and teams actually perform faster with microservices (likely just because of a smaller batch size). Still, the improvement we have gotten so far by dismantling monoliths into microservices is not enough. Version explosion of components is a huge problem, and there is a lot of potential to make things better.

Link to discuss on HN.

Japanese translation by IT News: マイクロサービスにおけるバージョンの組み合わせ爆発

Chinese translation by InfoQ: 微服务——版本组合爆炸!

Russian translation by me on Habr.com: Микросервисы — комбинаторный взрыв версий
